This story has been updated. A new version can be found here.

We’ve all heard some version of the phrase: we learn in different ways. American cartoonist George Evans’ famous quote, “every student can learn, just not on the same day or in the same way,” is still printed and posted as a motivational phrase today. While this idea may be socially accepted, it does not jibe well with the politics of higher education.

Right now, college students around the nation are receiving notifications from their universities to complete their Student Evaluations of Teaching Effectiveness. These anonymous surveys, referred to as SETEs, give students a way to rate the effectiveness of their teachers. They are commonly placed in faculty personnel files and used in employment decisions. Earlier this year, the San Francisco State University (SFSU) Faculty Association passed a resolution to abolish the current SETE criteria. SFSU is one of many universities joining the movement to reform student evaluations.

Gabriela Weaver, professor of chemistry at the University of Massachusetts Amherst, began teaching when she was 27 years old. As a petite woman, she immediately noticed certain comments that surprised her, such as remarks about her clothing and a sense that students expected her to be “mothering” them instead of teaching them.

Weaver has been teaching for nearly 30 years now and is part of the movement to reform these surveys. When she came to UMass, she became director of the Center for Teaching and Learning, and in that role she is the institutional representative for the Bayview Alliance (BVA), a network of research universities.

At her first BVA meeting, she had the opportunity to propose a project that institutional partners might be interested in.

“I proposed two different things,” Weaver said. “The one about teaching evaluation piqued the interest of several people there.”

That, Weaver said, was the beginning of the partnership among UMass, the University of Colorado Boulder, and the University of Kansas. Their research on student evaluations, titled the TEval Project (Transforming the Evaluation of Teaching), was funded by the National Science Foundation in 2017.

Phoebe Young, a professor at CU Boulder, said she noticed pretty early on that there was something problematic about student evaluations.

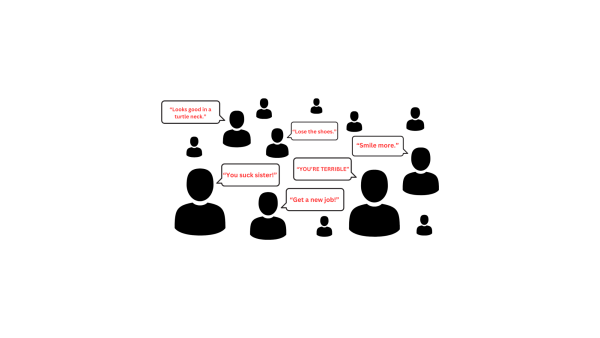

“Every female faculty I know, for example, and this happened to me the first semester I got teaching evaluations, gets a note on it [student evaluations],” Young said.

“It says smile more,” she continued. “So that sort of suggests that there’s something weird going on with how students are being asked to evaluate their own experience, right? So it didn’t make me think that they were worthless, but it made me think, okay, you sort of have to take them with a grain of salt.”

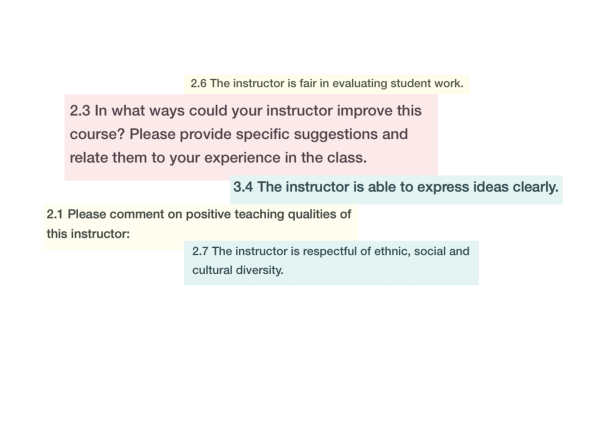

Since CU Boulder joined the movement, many things have changed, including the kinds of questions asked on evaluations. When Young began teaching at the university in 2009, the questions were very broad, such as “rate the professor overall” or “rate the course overall.” Now, they aim to gauge each student’s personal learning experience.

The University of Southern California has also transformed its student evaluation process.

Morgan Polikoff, professor of education at USC, is on the Rossier Committee that changed the way his department uses these surveys. As at CU Boulder, he said, the department has weeded out questions that ask students to rate the course or professor “overall.”

Additionally, the university no longer reports the quantitative data from student surveys in tenure and promotion cases, according to Polikoff.

“Now, that said, when we’re evaluating people, we have the evaluations in front of us, and we can go and look at them,” Polikoff said. “And it’s possible that the numbers might influence the way that people think about, you know, whether someone’s a good teacher or a bad teacher or something like that. But we do not use the numbers in any formal reporting, and I would say we’re discouraged from relying on the numbers.”

The accuracy of the data has been a point of criticism in the movement to reform evaluations. Philip B. Stark, a UC Berkeley statistics professor, has published research on the statistical flaws in student evaluation systems.

“I started to look at student evaluations seriously as a researcher,” Stark said. “It was sort of obvious from the beginning that they don’t measure what they claim to measure. And that’s reflected in student comments as much as anything else, that they comment on things that have nothing to do with the quality of instruction or learning or anything else.”

When Stark became chair of his department 10 years ago, he took on responsibility for promotion cases. What especially bothered him, he said, was that the university was relying on a system without much empirical support.

Stark published his research with Richard Freishtat, former director of the university’s Center for Teaching and Learning. Their research drew attention, and Stark has since been invited to advise a number of universities around the U.S. and Canada.

“One of the statistical fallacies that many studies, many observational studies of student evaluations commit, is to say, ‘oh, we looked at the average scores of female instructors and male instructors, and they’re not very different. Therefore, there’s no gender bias,’” Stark said.

But that, Stark said, is asking the wrong question.

“What you need to know is if you compare equally effective instructors or instructors who are doing the same thing, do they get the same rating, regardless of gender?” Stark stated.

Stark’s research has been cited in many arguments to reform student evaluations, including the SFSU Faculty Association’s resolution to remove SETEs from faculty personnel files.

Wei Ming Dariotis, professor emerita of Asian American Studies at SF State, helped author the Faculty Association’s resolution. One of her major ideas for reforming student evaluations, she said, came to her one night as an epiphany: nowhere in the assessment of teaching effectiveness could she find any alignment with the mission of the institution.

“So I had this epiphany that we should be asking, how does this course contribute to the mission of the institution and SF State’s mission statement?” Dariotis said, pointing out that SFSU’s mission statement centers on social justice.

It occurred to her, Dariotis said, that the existing student evaluation system does not allow for faculty pedagogical innovation and, in fact, punishes it. In other words, she said, faculty are expected to already know how to teach, and then they are given no room to learn new things.

This led Dariotis to create a task force on teaching effectiveness assessment to reform evaluations.

Dariotis’ team applied for a grant from the Spencer Family Foundation to fund a larger-scale study but was not able to secure it. The resolution she helped draft has passed SF State’s Faculty Association and will go before the statewide union’s assembly for a vote.

Scholars, including those quoted in this article, widely agree that student feedback should not be abolished. However, they also widely agree that the current system is flawed at best. A great deal rides on a survey taken by students whose emotions and understanding of the questions vary widely.