Nicole Gonzales (they/them) is the current online editor for Xpress Magazine. They are a fourth-year journalism student minoring in sociology and political...

April 22, 2022

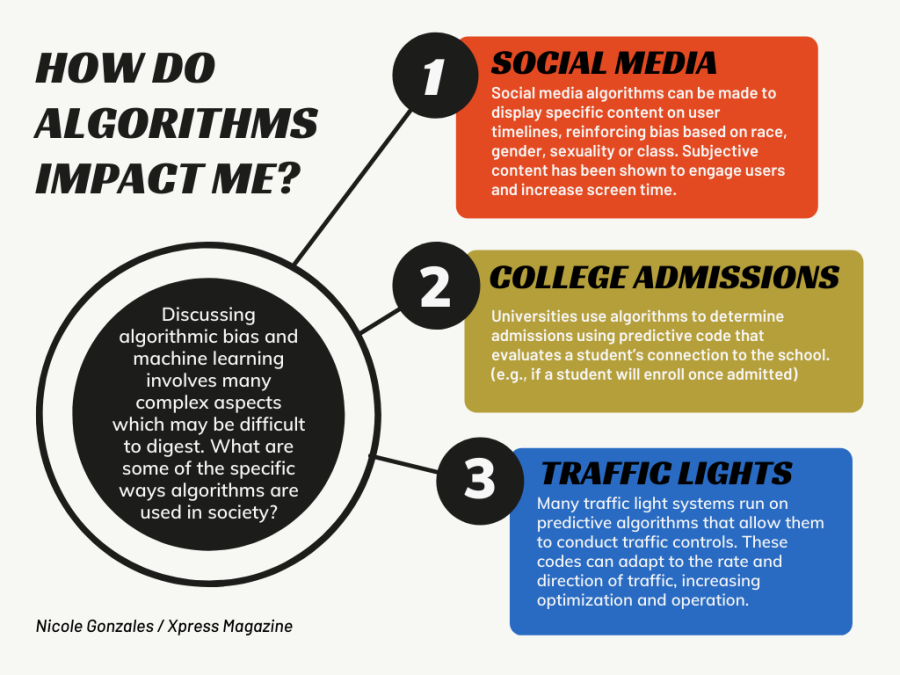

In the twenty-first century, virtually everything is processed or created through code and algorithms. From social media platforms and college applications to GPS routes, the ever-increasing presence of technology causes most people to interact with algorithms on a daily basis — whether they are aware of that or not.

A recent phenomenon known as algorithmic bias presents issues in machine learning and computer code. While intelligent and useful, certain algorithms can reproduce prejudice and discrimination in the data they process, causing concern among computer scientists and technologists alike.

So — can technology be racist? How exactly does this impact society, and what can be done about it?

How algorithms work

Algorithms exist as a set of instructions that computers use to solve problems and execute tasks, explained Jesica Maldonado.

“We use algorithms to automate certain tasks that would take up a lot of time and space if they were done manually,” said Maldonado.

Maldonado works as the president and founder of EDiT at San Francisco State University. EDiT, Encouraging Diversity in Tech, is a program that highlights underrepresented voices in the computer science community at SF State by providing a monthly newsletter.

“Before an algorithm even runs, there’s got to be data that exists, and that is going to go into the algorithm. If your data is biased [or] you have bad data going into the algorithm, then you’re going to have bad outcomes coming out,” said Betsy Cooper, the founding director of the Aspen Tech Policy Hub, a program that trains technologists of all backgrounds on policy processes with machine learning. Cooper has also served as the founding executive director of the UC Berkeley Center for Long-Term Cybersecurity.

“It’s basically like you’re teaching your puppy how to do work,” said Cooper. “And then you train it so that it can succeed at doing the task you want it to do.”

What is algorithmic bias?

Within each step in the coding process, there is a potential for human faults to be replicated by the machines doing the work.

“First is the training data; that’s the data that you help the algorithm learn what it’s doing,” said Cooper. “If the training data is biased, then that bias is going to carry through in what the algorithm learns.”

When the process starts from a place of human discrimination, the code can amplify that discrimination.

“For instance, let’s say that a company only hires white males into AI technologist jobs, then it’s very likely that the algorithm [will] learn that a good candidate for that job is going to be a white male,” said Cooper. “Then it might downgrade black female applicants on the assumption that that is a problem.”

Drawing from a batch of non-diverse data will limit the diversity of employees.
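
Cooper's hiring scenario can be sketched in a few lines of Python. This is a toy illustration, not a real screening system: the records, the group labels and the `train_screener` function are all invented here, and the "model" simply memorizes the share of past applicants from each group who were hired.

```python
from collections import defaultdict

# Hypothetical historical records: (group, was_hired). Invented for illustration.
HISTORY = [
    ("white_male", True),
    ("white_male", True),
    ("white_male", False),
    ("black_female", False),
    ("black_female", False),
]

def train_screener(history):
    """Learn a score per group: the fraction of past applicants who were hired."""
    hired = defaultdict(int)
    seen = defaultdict(int)
    for group, was_hired in history:
        seen[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / seen[group] for group in seen}

scores = train_screener(HISTORY)
for group, score in sorted(scores.items()):
    print(f"{group}: {score:.2f}")
```

Because no one from the second group was ever hired in the invented history, the learned score for that group is zero. Nothing in the code mentions prejudice; the skew comes entirely from the data it was given.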

“[Another] type of bias is actually within the algorithm itself,” said Cooper. “If it was coded to add one plus two equals six, then every time you see one plus two, you’re going to get the wrong answer.”
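
A minimal sketch of that second kind of bias, assuming a deliberately miscoded rule (the function names `buggy_add` and `correct_add` are hypothetical): the input is perfectly clean, yet the answer is wrong in the same way every time.

```python
def buggy_add(a, b):
    # Deliberate bug mirroring Cooper's example: the rule itself is wrong.
    return (a + b) * 2

def correct_add(a, b):
    # The rule as it should have been written.
    return a + b

# The same clean input produces the same wrong answer on every run.
results = [buggy_add(1, 2) for _ in range(3)]
print(results)
print(correct_add(1, 2))
```

Better data would not help here, because the error lives in the rule rather than in what is fed to it.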

In the 2020 peer-reviewed paper titled “Algorithmic bias: review, synthesis, and future research directions,” the authors looked into the nature of computer-driven decision making and its potential to replicate discrimination.

Writing in the European Journal of Information Systems, technologists Nima Kordzadeh and Maryam Ghasemaghaei analyzed the connection between algorithms and the biases that arise during their operation.

They found that “algorithmic systems can yield socially-biased outcomes, thereby compounding inequalities in the workplace and in society.”

Researchers assert that bias in this form is an emerging issue that is often overlooked by those in computer science fields.

“Despite the enormous value that AI and analytics technologies offer…algorithms may impose ethical risks at different levels of organizations and society,” they wrote. “A major ethical concern is that AI algorithms [are] likely to replicate and reinforce the biases that exist in society.”

A biased society will produce biased algorithms — further perpetuating prejudice and discrimination in social structures.

Notably, major companies and industries have begun using algorithms for large-scale decision-making. One of the most notorious uses is resume screening.

“If your pool of possible job candidates is biased, the algorithm can’t correct from that,” said Cooper. “Even if you’ve taught the algorithm that it shouldn’t take race into account, if there are no diverse candidates in the data that’s going in, then you’re going to see bias shown there as well.”

Examining the results of automated resume screening is one of the most tangible ways to see the bias that can arise from algorithmic systems.

“When using resume screeners, potentially you may not notice that that resume screener is devaluing people whose last name represents a certain ethnic or racial background,” said Cooper.
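
One way such a pattern might be noticed is to compare how often a screener advances applicants from different groups. The sketch below is an assumption-laden illustration, not a method described by Cooper: the decisions, the group names and the `selection_rates` helper are all invented.

```python
from collections import Counter

# Hypothetical screener decisions: (group, advanced_to_interview). Invented data.
DECISIONS = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

def selection_rates(decisions):
    """Return the fraction of applicants in each group that the screener advanced."""
    total = Counter(group for group, _ in decisions)
    advanced = Counter(group for group, ok in decisions if ok)
    return {group: advanced[group] / total[group] for group in total}

rates = selection_rates(DECISIONS)
# Ratio of the lowest selection rate to the highest; 1.0 would mean equal rates.
ratio = min(rates.values()) / max(rates.values())
print(rates)
print(round(ratio, 2))
```

A low ratio is a prompt to look more closely at the screener and its training data, not proof of discrimination on its own.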

The 2019 academic article “Artificial intelligence and algorithmic bias: implications for health systems” examines the presence and impact of machine operations in healthcare. Assessing the challenges, use and significance of algorithms in health systems, researchers pose the question — is AI safe in larger-scale societal systems?

In their findings, medical researchers state that when left unchecked or used without proper guidance, algorithms pose a greater threat to fair healthcare systems.

They explain that “the application of an algorithm [can] compound existing inequities in socioeconomic status, race, ethnic background, religion, gender, disability or sexual orientation.”

While AI and algorithmic systems have not fully replaced human intelligence or operations, they are becoming increasingly present. This presence raises worry for those in the computer science fields.

“People manually have to train these systems with data and then decide how that data is interpreted by the system,” said Maldonado of EDiT. “That determines how the system works.”

Essentially, algorithms have three main aspects: data input, decision making and data output. At each point in the process, there is a possibility for biases to creep in.

“I think it’s very harmful that most people are unaware of this issue,” said Maldonado. “While there are people who realize that many systems are biased, they don’t realize that the issue begins at the hands of the people who create these algorithms.”

The concern shown by professionals in this field is also consistent with academic findings.

“AI is a potentially transformative tool,” wrote the authors of the 2019 article. “If bias exists in society, it will both manifest in systems and be represented in algorithms.”

The need for diversity in technology

“Achieving more diversity in tech is important to me because — as a queer brown woman — it has often been lonely to navigate academic and professional experiences,” said Maldonado. “We have to work to include people with different socioeconomic backgrounds, disabilities, immigration statuses and keep in mind how these backgrounds make a person’s experience in tech different.”

Introducing diverse ideas in tech will not only benefit computer scientists but will also improve our society at large, experts believe. Additionally, holding those in positions of power accountable streamlines solutions.

“I’ve seen and experienced oftentimes companies and organizations talk about including more queer and people of color in tech, but it is usually just for the statistics,” said Maldonado. “They never talk about why our participation and inclusion in tech is so essential and crucial for the benefit of our communities.”

Can the prejudice present in biased algorithms be corrected?

While technologists may be aware of complications within computer algorithms, correcting them is more complex than it may seem.

“By having diverse teams, you’re more likely to have people catch a lot of the problems that you might not see if you’re only considering the algorithm coming from your own perspective and background,” said Cooper.

The intricate nature of algorithms makes this issue fundamentally hard to combat.

“One reason that algorithms feel scarier is that it sort of spreads the burden across a number of different aspects of the algorithm problem,” said Cooper. “It’s harder to pinpoint where the bias is coming in. As a result of that, maybe it’s not as easy to figure out how to handle it.”

The future of algorithms

As society rapidly moves towards a technologically integrated day-to-day reality, it’s clear to those in the computer science realm that algorithms will continue to increase in use.

“It’s important to make people aware that increasingly in their day-to-day actions, even when they think they’re talking to a human, they may well be engaging with an algorithm,” said Cooper.

As the use of machine learning spreads, technologists believe it’s important to educate and inform the broader public on how they are impacted.

“I think that we really need to work to expose to our communities how we can actually make a difference with code,” said Maldonado.

Maldonado emphasized the importance of moving forward with diversity in mind: “We have to especially dismantle the idea that coding and tech are only for a certain group of people.”

Looking at the future of algorithms, experts are also discussing how comfortable we may be with their presence.

“With the internet of things where everything we touch is digital, the more data is collected, the more algorithms are being used, and the more important it will become,” said Cooper. “So then the question for the rest of us is how comfortable are we with that? And how much are we paying attention?”
