Paint or Pixels? The Ongoing Battle Between Tradition and Tech

AI image generators and their place in the art world.

Image generated by the Midjourney AI bot, following the prompt: “AI robot.” Midjourney AI is known for its hyperrealistic image generation and ease of use.

From Twitter bots to robots making coffee, advancements in artificial intelligence have allowed more and more technology to permeate our daily lives. It’s difficult to point to a corner of the tech world that hasn’t been infiltrated, and in some cases overrun, by artificial intelligence software. The use of AI in general can spark fiery ethical debates, but the art world in particular has been debating what place AI-generated artwork has on the wall…if it deserves one at all.

Public perception of AI a few decades ago generally centered on its potential to make daily life easier. It’s never been hard to imagine the typical utopian society with robot butlers and self-driving cars, but now that Tesla has revealed a working prototype of its “Optimus” humanoid robot, that idealistic future seems less far-fetched every day.

These rapid advancements make AI tools more accessible than ever, much as mobile phones went from something only the wealthy could afford to something that’s in almost everyone’s pocket. These tools are so accessible that TikTok pages using them to fool their audiences have sprung up, introducing more people to this fascinating, somewhat frightening medium. The Apple and Google Play app stores host hundreds of applications that use primitive AI models to generate deepfakes, face swaps and more.

Now that these bots are at more people’s disposal, it’s no surprise that they have infiltrated the art world. Multiple works of art created using artificial intelligence have been sold. “Portrait of Edmond Belamy,” created by the French tech-and-research collective Obvious, sold for almost half a million dollars in 2018, and “Memories of Passersby I,” created by Mario Klingemann, sold for about $50,000 in 2019. The sales received extensive media coverage and sparked global debates about the works’ artistic validity.

These debates tend to pit artists who don’t see the encroachment of AI into the art world as a threat against others who view AI-produced artwork as incomparable to human artwork and a cause for concern.

According to Maddie Norkeliunas, an Academy of Art University student, what sets human artwork apart from its AI counterpart is the human connection and labor that go into its production, something AI couldn’t possibly hope to replicate any time soon.

“I guess, for me, I really liked the fact that when you have grown the abilities to do your art, you can fully see your ideas that you have in your head come to fruition, like really well. And that’s always something that’s really exciting,” said Norkeliunas as she explained what makes art special to her, and why a computer isn’t able to replicate that.

“I think that’s what really is the integrity of art. And that’s why people value human art a lot of the time, because it’s like, ‘oh shit,’ a fucking person did that…someone with their own eyes and hands…”

When it comes to generating images, there are a lot of AI image generators to choose from.

Dall-E mini is an AI model available to anyone with an internet connection. It is a less sophisticated take on the Dall-E and Dall-E 2 models developed by OpenAI, which have yet to be released to the public. The premise is simple enough: type a prompt into the generator, and the AI will generate a series of images attempting to match the description. That’s pretty much how every image generator on the market operates at the moment, but huge variations between bots still exist.

Dall-E mini isn’t the first of its kind. In fact, many AI models online accomplish the same task, with varying degrees of effectiveness. It has, however, been one of the more popular models, thanks to its pervasiveness on social media and general ease of use.

Although some may view the growing availability of tools like Dall-E mini as a threat to traditional forms of artwork, Paula L. Levine, a digital media & emerging technologies professor at SF State, acknowledges the place technology has already had in the art world.

“Every time calls for a particular language to respond to the circumstances that exist,” Levine said. “It’s not a threat to painting, it’s an iteration of responding to the circumstances now.”

Jasmine Mirzamani, a studio art and animation major at SF State, shared a similar sentiment, explaining that it would likely be more beneficial to embrace these new developments than to fight them.

“It’s the advancement of technology. Either join it, or keep painting and doing what you’re doing,” she said.

Mirzamani compared AI image generators to Miquela, a CGI model and character often used by companies to promote brands across social media.

“She’s modeled for companies and stuff, but she’s not real. People are like ‘oh, you’re replacing jobs from real models’…but I think it’s fascinating,” Mirzamani said. “It just shows where we’re going and what the future’s gonna be like. We just can’t stop it.”

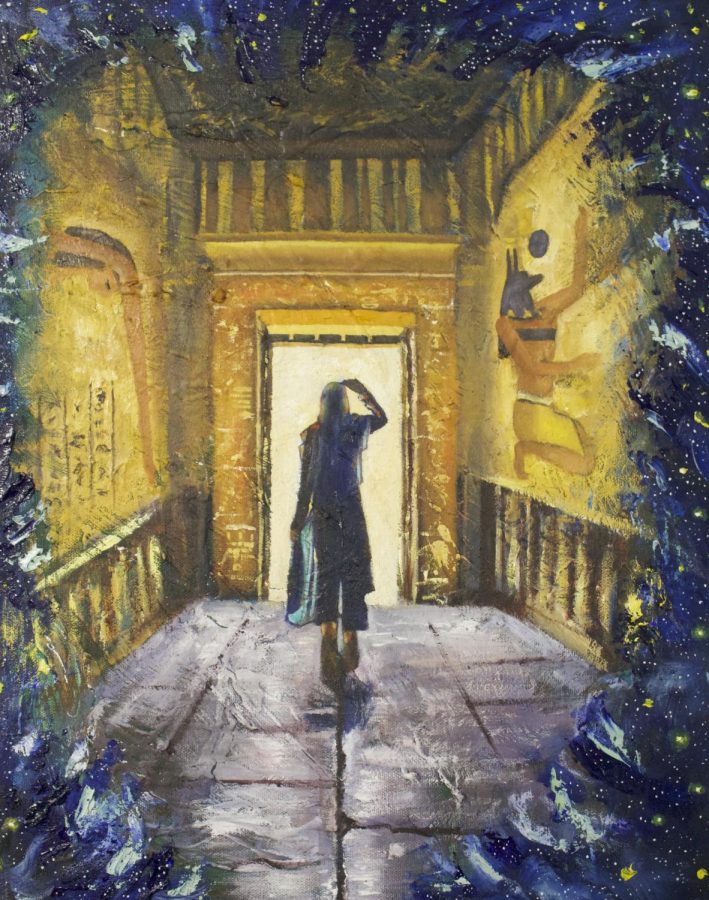

The following images were all created using Midjourney AI. The prompts entered into the AI were based on general descriptions of artwork made by Norkeliunas and Mirzamani, who both agreed to have their artwork compared to its AI counterparts.

These comparisons of real vs. AI artwork show just how low the barrier to entry is for some of these AI models, and they give readers an opportunity to consider the question: can art made by a computer really be considered art?

Other image generators have been created by independent developers over the years, and more continue to appear as interest in the subject grows.

So how exactly do these AI models work? The short answer is: it really depends.

In image-generating AI, and in machine learning more generally, a Generative Adversarial Network (GAN) is often used, but it is far from the only approach developers and companies take. In fact, some developers are pivoting to diffusion models after seeing greater success in generating photorealistic images. This is why Dall-E 2 uses a diffusion model, likely in an effort to keep generated images from looking like something out of a horror movie, a problem that’s less likely to occur with diffusion.
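For readers curious what “diffusion” means here, the sketch below shows only the first half of the idea: gradually burying an image in random noise. It assumes a textbook setup (a linear noise schedule over 1,000 steps and a toy “image” of four pixel values), not how Midjourney or Dall-E 2 are actually built; those companies haven’t published their full code. A real diffusion model trains a neural network to run this process in reverse, removing noise step by step until a new image emerges.

```python
import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Per-step noise amounts, rising linearly (an illustrative textbook choice, not any product's setting)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Running product of (1 - beta): the share of the original image's signal left at each step."""
    result, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        result.append(running)
    return result


def noisy_version(pixels: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Jump straight to a noisy step: x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.5, -0.2, 0.9, 0.0]  # hypothetical toy "image": four pixel values in [-1, 1]
    bars = alpha_bars(linear_beta_schedule(1000))
    for t in (0, 250, 999):
        # Early steps barely change the pixels; by the last step they are almost pure noise.
        print(t, round(bars[t], 4), [round(v, 2) for v in noisy_version(image, bars[t], rng)])
```

The point of the design is that the noising step is easy and fully known, so a model can be trained on millions of “before and after” pairs to learn the hard direction: turning noise back into something that looks like a picture.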

Still in its beta-testing phase, Midjourney AI is another image generator that uses a diffusion model, which has helped earn it a solid reputation for creating incredibly realistic images that can be modified further after they are first generated, something relatively unique to the bot. Unlike the Dall-E bots, Midjourney runs through a Discord server.

For more information and specifics on machine learning models and artificial intelligence in general, check out this video.

With so many developers playing their cards close to the chest and keeping the specifics of their code from the public, it’s safe to say everyone does things a little differently. Given the growing competitiveness of the market and the huge differences in approach between companies, this isn’t too surprising.

That being said, no image generator can exist without some sort of dataset from which it learns the information it uses to generate images. You can’t make a collage without a bunch of magazines and scrap paper, right? AI models like those mentioned above all have to learn what constitutes certain objects before they’re able to generate their own images of those objects. This learning takes place through custom algorithms made by developers, and it never really stops, which means we can only expect this technology to keep getting better.

Eian Gil (he/him) is a journalism & political science student at SF State, currently serving as Xpress magazine’s editor-in-chief. Originally from...
